Power Your GenAI Apps & Agents with Real-Time Context

Fresh data, rapidly materialized views, and intelligent pipelines that feed agents & LLMs so they stay accurate, grounded, and efficient.

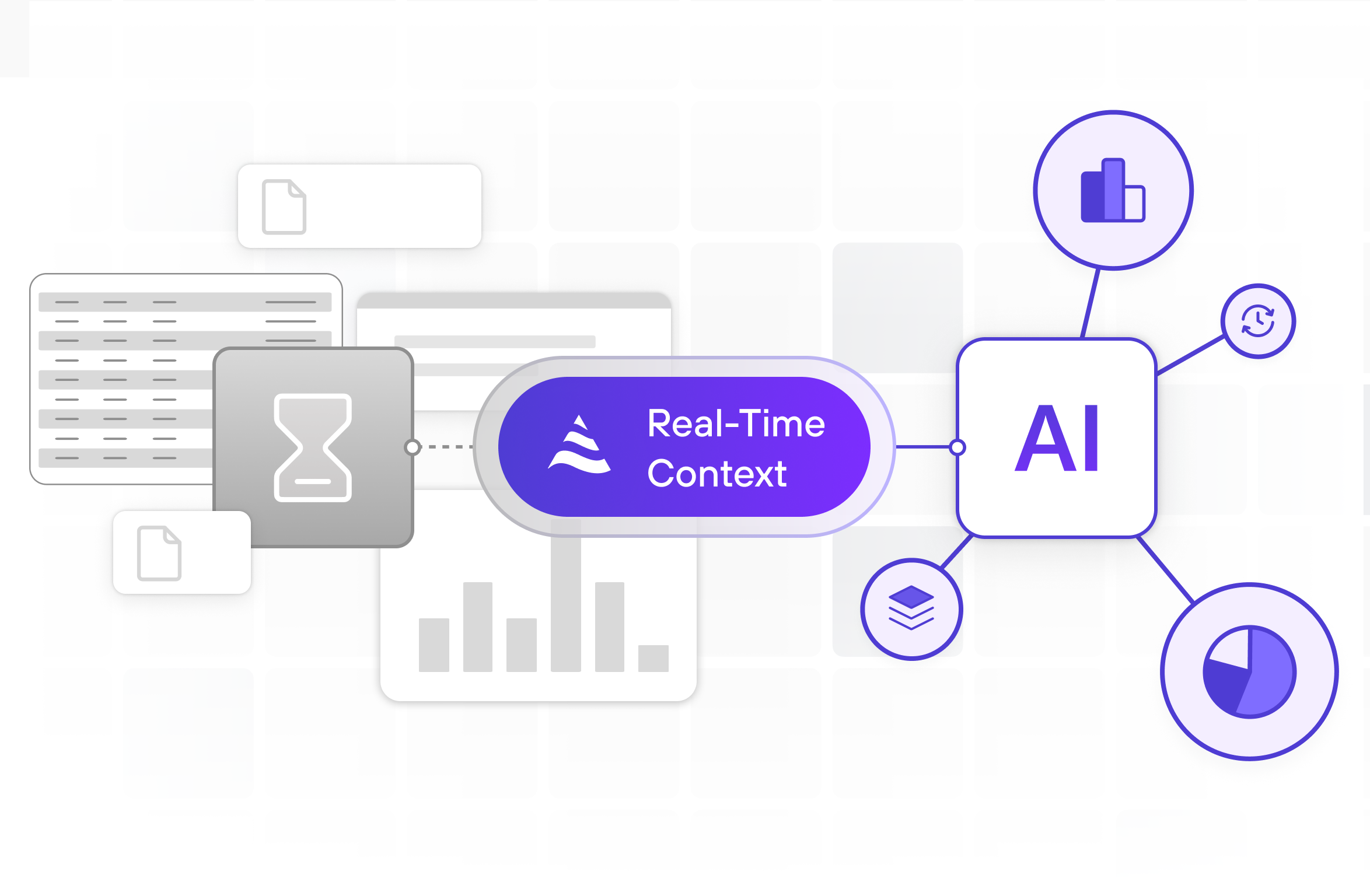

Real-Time Context

Up-to-the-second context for agents and applications.

Intelligent Inference Pipelines

Trigger actions, alerts, and decisions without waiting for batch windows.

Cost Control

Call expensive LLMs only when necessary, using smart gating.

Built for Scale

Integrate logs, events, embeddings, and metadata from any source, and serve them via MCP, API, or direct lookup.

AI Then vs Now

AI with Stale Data

- Hallucinates because context is outdated
- Misses critical real-time events
- Frustrates users with wrong answers
- Wastes money on irrelevant LLM calls

AI with Fresh Data (DeltaStream)

- Grounded in real-time materialized views
- Detects and responds to events instantly
- Boosts user trust and satisfaction
- Optimizes spend by calling LLMs only when needed

High-ROI, Real-World Use Cases

- Real-Time Vector Embeddings

  Companies implementing real-time embeddings reduce hallucination rates by up to 40% and improve query relevance, driving higher customer satisfaction and lowering operational risk.

- Customer Profile Streaming

  Real-time streaming of customer profiles enables personalized responses that boost conversion rates by 15–20% and reduce support resolution times by over 30%.

- Retail Personalization

  Streaming product, pricing, and inventory data in real time helps retailers cut cart abandonment by 25% and increase average order value through more relevant recommendations.