19 Sep 2025

Your GenAI Is Flying Blind. It’s Time to Give It Real-Time Vision

Table of contents

- The Stale Context Gap in GenAI: Why Real-Time Data Matters

- Real-Time RAG Explained: Keeping Knowledge Fresh

- When is Real-Time RAG Not Enough?

- The Solution: A Real-Time Context Engine

- Step 1: Fuse Live Data into Actionable Context

- Step 2: Serve Live Context to Your Agent

- From Static Answers to Aware Agents

- The Future of AI Is Context-Aware

As of 2025, many organizations have built or are piloting GenAI applications powered by Retrieval-Augmented Generation (RAG). RAG connects Large Language Models (LLMs) to proprietary data stored in a vector database, enabling them to answer nuanced questions about products, policies, and history.

But most of these systems share a hidden weakness: they’re often out of sync with reality.

Traditional RAG pipelines frequently depend on databases that are refreshed by batch ETL jobs running hourly or nightly. This introduces a stale context gap—your AI knows what happened yesterday, but not what just happened. For the next wave of AI agents that don’t just answer but act, that gap limits effectiveness and trust.

It’s time to close it. It’s time to give your AI real-time vision.

The Stale Context Gap in GenAI: Why Real-Time Data Matters

How much delay is acceptable depends on the domain. In customer support, a delay of hours may be fine. But in fast-moving domains, even a few minutes can lead to failure:

- An e-commerce bot promising an item is available when it sold out moments ago.

- A financial assistant recommending a trade without seeing breaking market news.

- A logistics agent routing deliveries without noticing a traffic jam that formed seconds earlier.

These breakdowns don’t happen because the LLM is weak. They happen because its context isn’t fresh.

Real-Time RAG Explained: Keeping Knowledge Fresh

In response, many teams have embraced Real-Time RAG. Instead of waiting for nightly or hourly updates, real-time RAG continuously pushes new documents into the vector database, keeping the retrieval index current within minutes or even seconds.

This solves part of the problem. The LLM now has access to the freshest documents and unstructured knowledge—from updated FAQs to breaking news articles.
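To make the idea concrete, here is a minimal sketch of an index that is updated as documents arrive instead of on a batch schedule. Everything in it is an assumption for illustration: `LiveIndex` is a hypothetical in-memory stand-in for a vector database, and `embed` is a toy bag-of-words function where a real system would call an embedding model.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (assumption: stands in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LiveIndex:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self._docs = {}  # doc_id -> (text, vector)

    def upsert(self, doc_id: str, text: str) -> None:
        # Re-embed and overwrite on arrival, so an older version is never served.
        self._docs[doc_id] = (text, embed(text))

    def search(self, query: str, k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(
            self._docs.items(),
            key=lambda item: cosine(q, item[1][1]),
            reverse=True,
        )
        return [(doc_id, text) for doc_id, (text, _) in ranked[:k]]


index = LiveIndex()
index.upsert("faq-shipping", "shipping takes five business days")
index.upsert("faq-returns", "returns are accepted within 30 days")
# An updated policy arrives on the stream and replaces the old entry immediately.
index.upsert("faq-returns", "returns are accepted within 60 days")

top = index.search("how many days for returns")
print(top)
```

The point of the sketch is the `upsert` path: freshness comes from writing to the index per document, at the moment the document changes.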

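But there’s a catch. Real-Time RAG is still document-centric. It struggles when the context needed is stateful or event-driven: the latest customer click, an order status change, a system alert, or a live market tick. Embedding every event as a “document” is inefficient: each event would need an embedding call and an index write before it becomes retrievable, which adds latency, and similarity search is a poor fit for exact questions such as “what is this order’s status right now?”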

When is Real-Time RAG Not Enough?

Before architecting a more complex solution, check whether you've actually outgrown document-centric RAG. You might need to move beyond it if:

- You're building proactive agents. You need the AI to act on a sequence of events (like user frustration signals), not just react to a user's query.

- User state changes frequently within a session. An e-commerce journey, a financial trade, or a SaaS user workflow involves many state changes that are critical context.

- Decisions depend on the now. The value of your AI's action diminishes sharply with every passing second, such as in fraud detection or supply chain logistics.

If this describes your use case, you need more than just fresher documents. You need live awareness.
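The first criterion, acting on a sequence of events, can be sketched as a sliding-window check. This is a minimal illustration under stated assumptions: the class name `FrustrationDetector`, the `"error"` event type, and the threshold of three errors in 60 seconds are all hypothetical, and events are assumed to arrive in timestamp order per user.

```python
from collections import deque


class FrustrationDetector:
    """Flags a user once `threshold` error events fall inside a sliding window."""

    def __init__(self, window_seconds: float = 60.0, threshold: int = 3):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self._errors = {}  # user_id -> deque of recent error timestamps

    def observe(self, user_id: str, event_type: str, ts: float) -> bool:
        """Return True when the agent should proactively step in."""
        if event_type != "error":
            return False
        recent = self._errors.setdefault(user_id, deque())
        recent.append(ts)
        # Drop errors that have aged out of the window.
        while recent and ts - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) >= self.threshold


detector = FrustrationDetector(window_seconds=60, threshold=3)
events = [
    ("u1", "error", 0.0),
    ("u1", "click", 5.0),
    ("u1", "error", 20.0),
    ("u1", "error", 45.0),   # third error within 60 seconds
    ("u1", "error", 200.0),  # earlier errors have expired by now
]
alerts = [detector.observe(*event) for event in events]
print(alerts)
```

No user query triggers the alert here; the signal comes from the event sequence itself, which is what a document index cannot express.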

The Solution: A Real-Time Context Engine

To give GenAI agents situational awareness, you need a new component at the core of your AI stack: a Real-Time Context Engine.

This engine continuously processes events as they happen, maintains queryable views of the latest state, and makes them available to your LLMs. Think of it as your AI’s central nervous system—ingesting live signals, transforming them, and feeding them into the “brain.”

This modern pattern unfolds in two steps:

Step 1: Fuse Live Data into Actionable Context

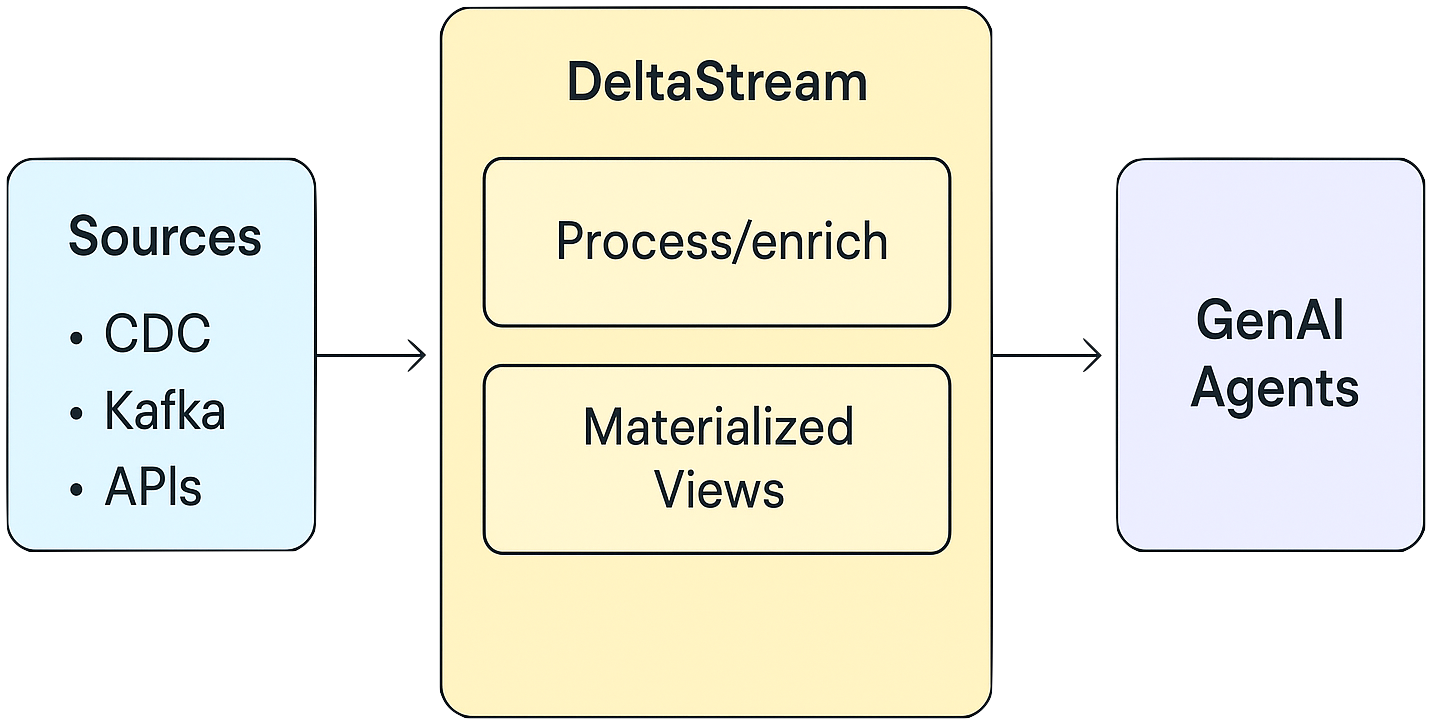

Instead of relying only on documents or batch pipelines, create a continuously updated view of your business reality directly from the source. A streaming context engine (such as DeltaStream) captures data as it arrives:

- Event Streams: Kafka, Kinesis, Pub/Sub

- Database Changes via CDC: Postgres, MySQL, DynamoDB

- External Signals: APIs, cloud services

Using declarative SQL, you can filter, enrich, and join these feeds into real-time materialized views. These views stay synchronized within seconds, offering a unified and accurate picture of what’s happening now.

For example, a single view can continuously blend customer attributes, clickstream activity, and ticket status into one table that always reflects the present moment.
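As a rough illustration of what such a view maintains, here is a minimal Python sketch rather than the engine's SQL. It is not DeltaStream's API: the class `CustomerContextView`, the source names (`customers_cdc`, `clickstream`, `tickets_cdc`), and the event fields are all hypothetical. It only shows the idea of folding several feeds into one row per customer as events arrive.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CustomerContext:
    customer_id: str
    plan: Optional[str] = None
    last_page: Optional[str] = None
    last_click_ts: Optional[float] = None
    open_tickets: dict = field(default_factory=dict)  # ticket_id -> status


class CustomerContextView:
    """Hypothetical in-memory stand-in for a real-time materialized view."""

    def __init__(self):
        self._rows = {}  # customer_id -> CustomerContext

    def apply(self, source: str, event: dict) -> None:
        """Fold one event from one feed into the per-customer row."""
        cid = event["customer_id"]
        row = self._rows.setdefault(cid, CustomerContext(cid))
        if source == "customers_cdc":
            row.plan = event["plan"]
        elif source == "clickstream":
            row.last_page = event["page"]
            row.last_click_ts = event["ts"]
        elif source == "tickets_cdc":
            if event["status"] == "closed":
                row.open_tickets.pop(event["ticket_id"], None)
            else:
                row.open_tickets[event["ticket_id"]] = event["status"]
        else:
            raise ValueError(f"unknown source: {source}")

    def get(self, customer_id: str) -> Optional[CustomerContext]:
        return self._rows.get(customer_id)


view = CustomerContextView()
view.apply("customers_cdc", {"customer_id": "123", "plan": "pro-plan"})
view.apply("clickstream", {"customer_id": "123", "page": "dashboard.html", "ts": 1000.0})
view.apply(
    "tickets_cdc",
    {"customer_id": "123", "ticket_id": "5678", "status": "pending agent reply"},
)

row = view.get("123")
print(row)
```

A streaming engine would do the same work declaratively and durably; the sketch just makes the "one continuously updated row per customer" shape visible.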

Step 2: Serve Live Context to Your Agent

With these views in place, your GenAI application can retrieve both the freshest documents (via real-time RAG) and the latest system state (via a real-time context engine such as DeltaStream).

The backend calls the materialized view API, injects the live context into the prompt, and the LLM responds with both knowledge and awareness.
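The request flow just described might look like the following sketch. The functions `fetch_live_context` and `retrieve_documents` are hypothetical stand-ins that return hard-coded data so the example runs offline; in a real backend they would call the materialized view API and the retrieval layer, whose actual interfaces are not shown in this post.

```python
def fetch_live_context(user_id: str) -> dict:
    """Hypothetical stand-in for a call to the materialized view API."""
    # Hard-coded so the sketch runs offline; a real backend would query the view.
    return {
        "user_id": user_id,
        "plan": "pro-plan",
        "last_page": "dashboard.html",
        "seconds_since_last_click": 30,
        "open_ticket": {"id": 5678, "status": "pending agent reply"},
    }


def retrieve_documents(query: str) -> list:
    """Hypothetical stand-in for real-time RAG retrieval."""
    return ["The pro-plan integration guide describes a three-step API setup process."]


def build_prompt(query: str, docs: list, ctx: dict) -> str:
    """Combine retrieved knowledge and live state into one prompt string."""
    ticket = ctx.get("open_ticket")
    if ticket:
        ticket_line = f"open ticket #{ticket['id']} marked '{ticket['status']}'"
    else:
        ticket_line = "no open tickets"
    live = (
        f"User {ctx['user_id']} is on the {ctx['plan']}, "
        f"last clicked {ctx['last_page']} {ctx['seconds_since_last_click']} seconds ago, "
        f"and has {ticket_line}."
    )
    knowledge = "\n".join(f"- {doc}" for doc in docs)
    return (
        "Retrieved knowledge:\n" + knowledge + "\n\n"
        "Live context:\n" + live + "\n\n"
        "User query:\n" + query
    )


query = "I'm having trouble with my pro-plan integration."
prompt = build_prompt(query, retrieve_documents(query), fetch_live_context("123"))
print(prompt)
```

Keeping the live context in its own labeled section of the prompt is a design choice, not a requirement; it makes it easy to see which statements came from documents and which from current state.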

From Static Answers to Aware Agents

This architecture doesn’t replace RAG — it extends it. You get the best of both worlds:

- Static Knowledge (RAG / Real-Time RAG): documents, policies, manuals.

- Live Awareness (Streams): user activity, system state, external signals.

User Query:

“I’m having trouble with my pro-plan integration.”

Context provided to the LLM:

- Real-Time RAG: “The pro-plan integration guide describes a three-step API setup process…”

- Real-Time Context: “User 123 is on the pro-plan, last clicked dashboard.html 30 seconds ago, and has an open ticket #5678 marked ‘pending agent reply’.”

LLM Response:

“I see you’re on the pro-plan and already have an open ticket (#5678). I’ve flagged it for priority. Meanwhile, step 3 of the setup guide may help resolve the issue immediately.”

That’s not just an answer — that’s an aware, adaptive agent.

The Future of AI Is Context-Aware

Real-Time RAG keeps your knowledge fresh. A Real-Time Context Engine keeps your agents aware. Together, they close the stale context gap — but it’s the context engine that enables AI to operate with human-level responsiveness.

The gap between knowledge and action is closing. With real-time context engines like DeltaStream, it’s time to build AI that lives in the now.

This blog was written by the author with assistance from AI to help with outlining, drafting, or editing.