29 Mar 2023

What is DeltaStream and When Should You Use It?

As the creator of ksqlDB at Confluent, I know firsthand the power of stream processing, and I also understand its limitations and complexities. I founded DeltaStream to build a comprehensive platform that truly revolutionizes stream processing. The platform we built is based on three themes:

- A complete stream management and processing solution that lets users see value in minutes while driving down operating costs.

- A familiar and unified view of streams in leading streaming storage services (e.g., Kafka or Kinesis).

- A security model that elegantly enables and restricts access to data streams.

What is DeltaStream?

DeltaStream is a unified serverless stream processing platform that integrates with streaming storage services including Apache Kafka, AWS Kinesis, Confluent Cloud, AWS MSK and Redpanda. Think of it as the compute layer on top of your streaming storage. It provides the functionality of streaming analytics (stream processing) and streaming databases, along with additional features that make it a complete platform to manage, process, secure and share streaming data.

DeltaStream provides a SQL-based interface where you can easily create stream processing applications such as streaming pipelines, materialized views, microservices and many more. It has a pluggable processing engine and currently uses Apache Flink as its primary stream processing engine. However, DeltaStream is more than just a query processing layer on top of Kafka or Kinesis. It brings relational database concepts to the data streaming world, including namespacing and role-based access control, enabling you to securely access, process and share your streaming data regardless of where it is stored. Unlike existing solutions that mainly focus on processing capabilities, DeltaStream provides a holistic solution for both processing and operating/managing your streaming data.

Here’s a summary of DeltaStream’s main capabilities that make it uniquely suited for processing and managing data streams:

- DeltaStream is serverless. The user no longer has to worry about clusters/servers, architecting or scaling infrastructure to run real-time applications. Gone are the days of cluster sizing, keeping track of which cluster queries run in or how many tasks to allocate to your applications. Unlike many platforms that run multiple queries in one cluster and share cluster resources, queries in DeltaStream run in isolation, can scale up/down independently and seamlessly recover from failures! DeltaStream takes care of all those complexities so you can focus on building the core products that bring value to you and your organization.

- SQL as the primary interface. From creating databases and streams to running continuous queries or building materialized views on these streams, you can do it all in a simple and familiar SQL interface. DeltaStream provides SQL extensions that enable users to express streaming concepts that don’t have equivalents in traditional SQL. Additionally, if your compute logic requires more than SQL, you can use DeltaStream’s UDFs/UDAFs to define and perform such computations.

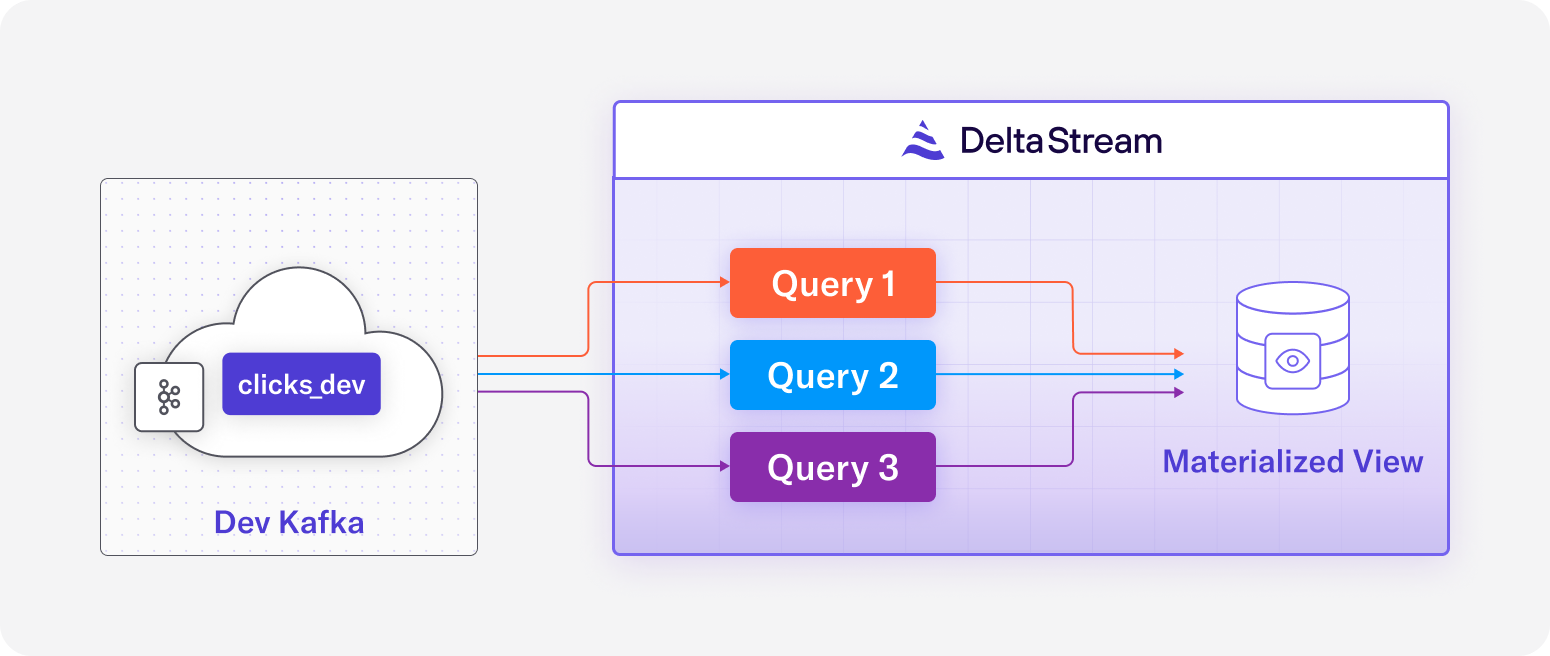

- Always up-to-date Materialized Views. Materialized views are a native capability in DeltaStream. You can build “always up-to-date” materialized views by using continuous queries. Once a materialized view is created, you can query it the same way you query materialized views in relational databases!

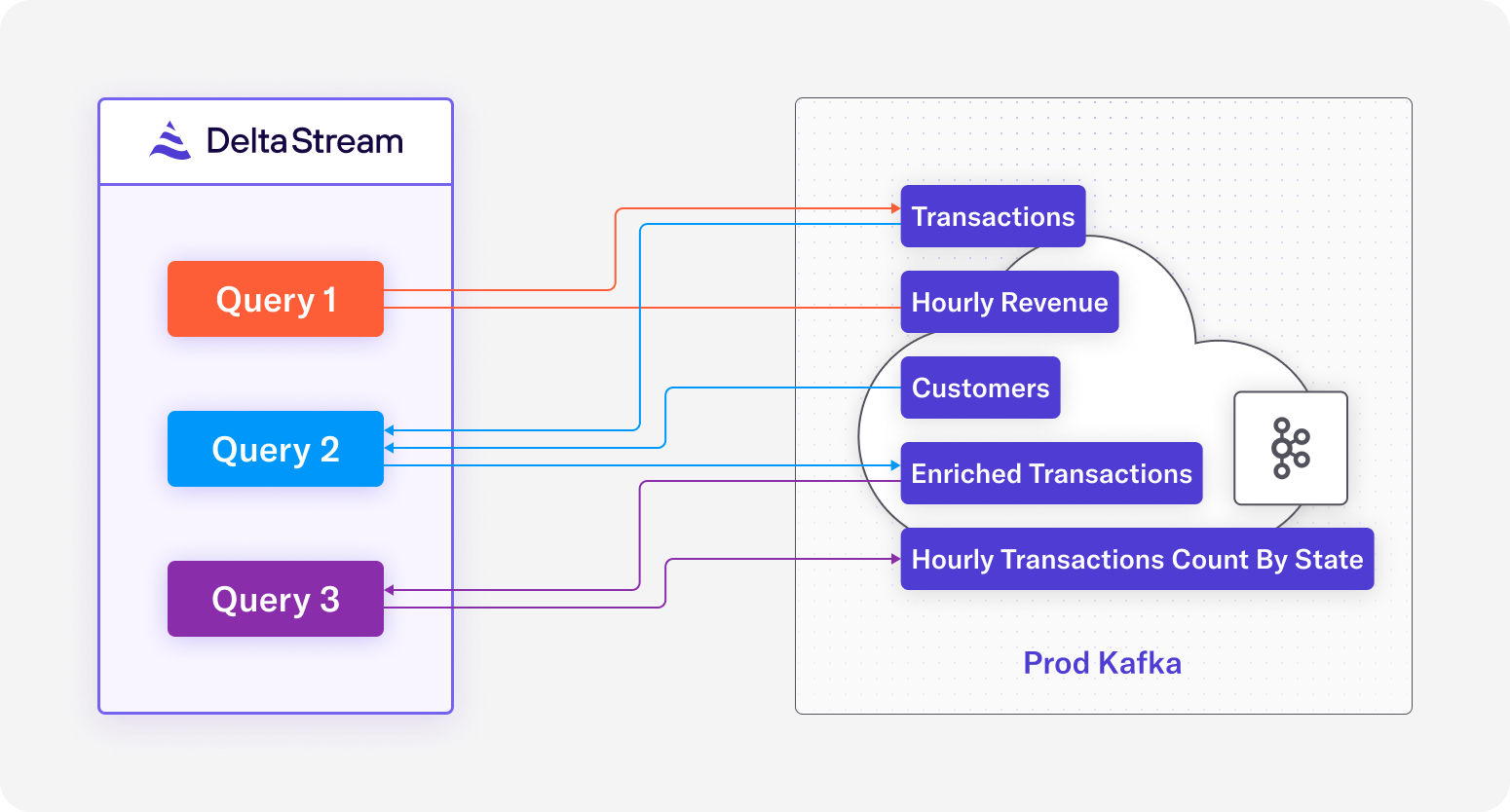

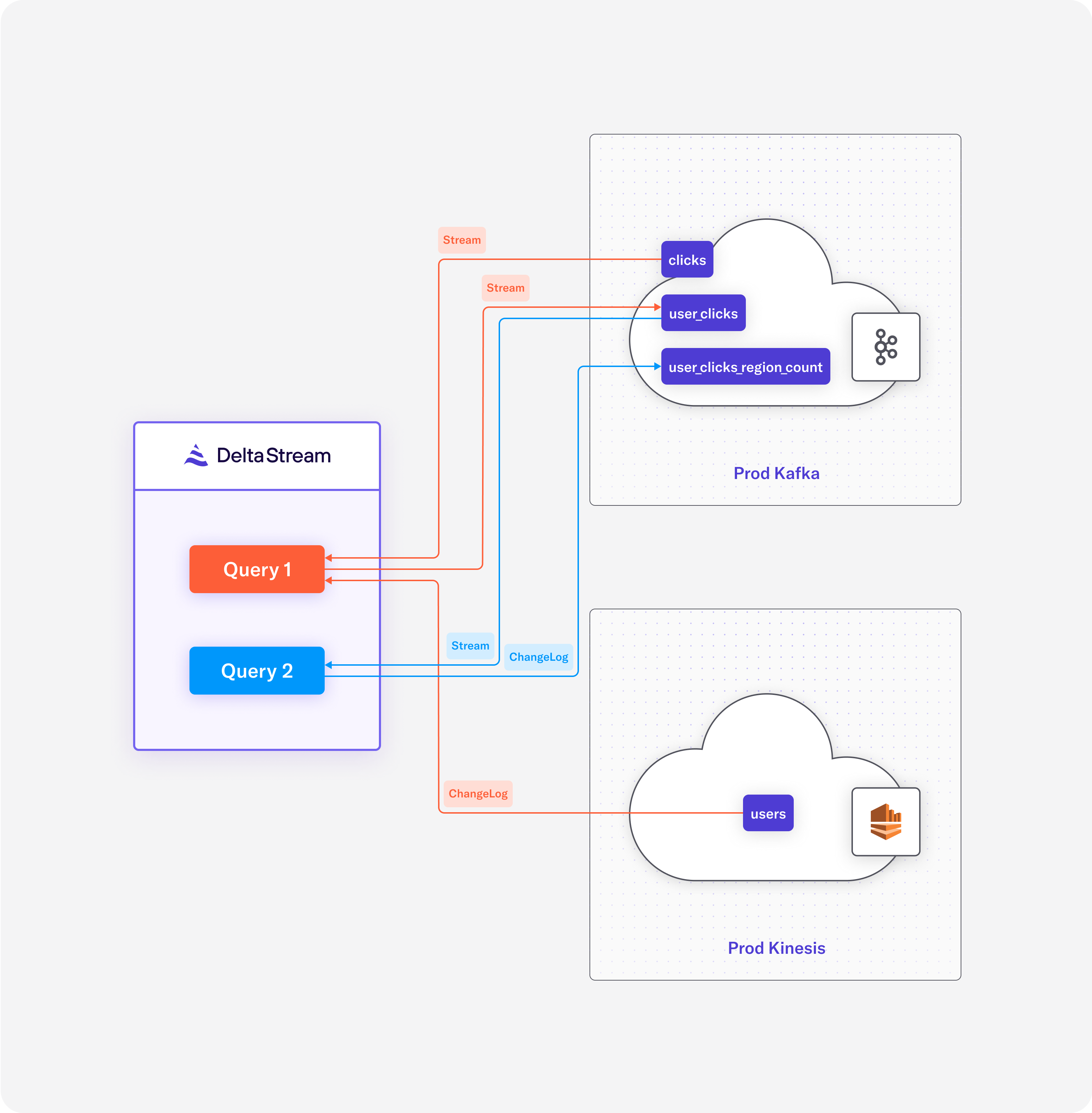

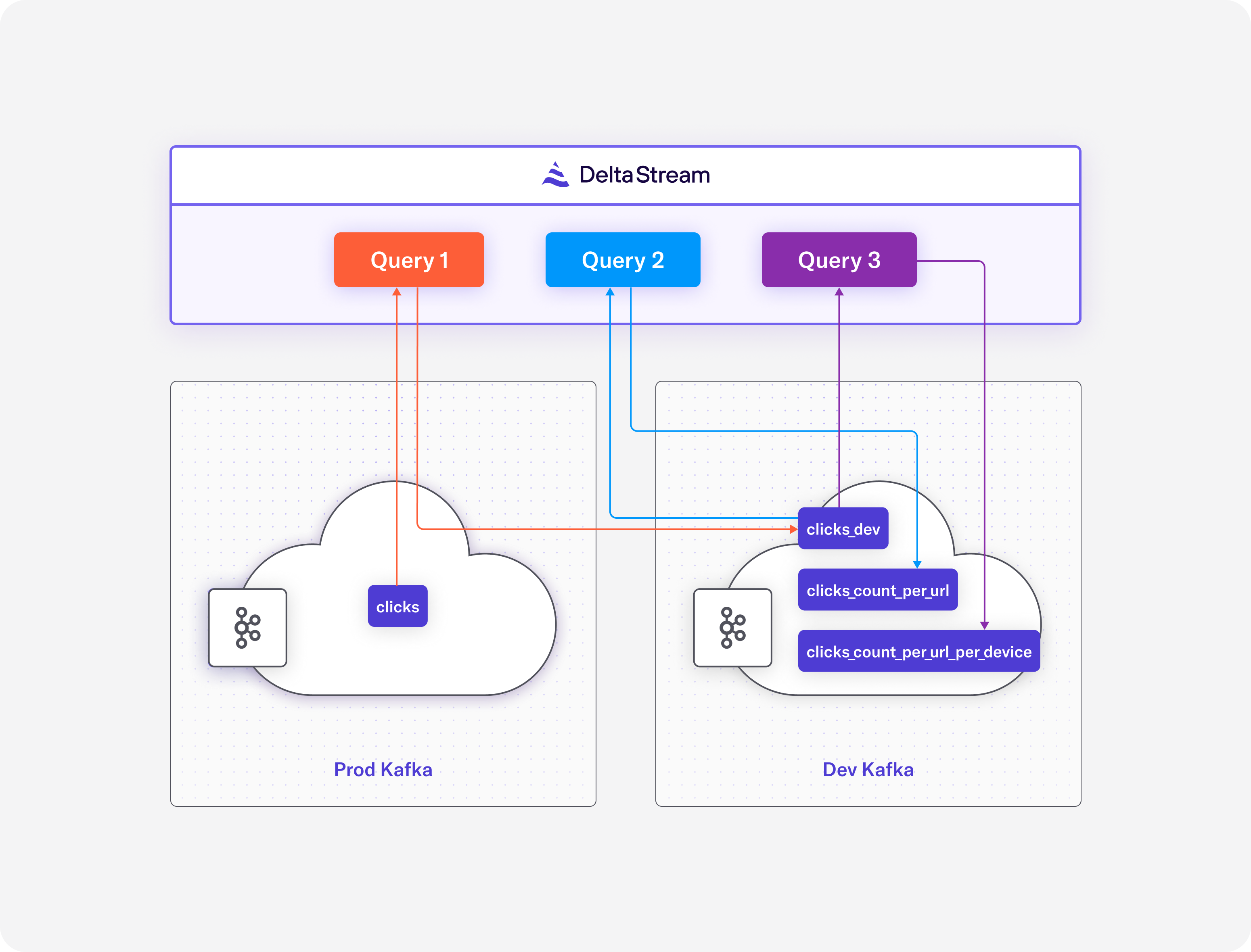

- Unified view over multiple streaming stores. DeltaStream enables you to have a single view into all your streaming data across all your streaming stores. For example, whether you are using one Kafka cluster, multiple Kafka clusters, or multiple platforms like Kafka and Kinesis, DeltaStream provides a unified view of the streaming data and you can write queries on these streams regardless of where they are stored.

- Intuitive namespacing. Streaming storage systems such as Apache Kafka have a flat namespace: you can think of this as a file system with no folders! This makes it very challenging to organize streams in such systems. By providing namespacing, DeltaStream enables users to organize their streams in databases and schemas, similar to the way they organize their tables in relational databases. And with the storage abstraction described above, you can organize your streaming data across all your streaming storage systems.

- Fine-grained security that you know and love. You can define fine-grained access privileges to determine who can access objects in DeltaStream and which operations they can perform on them. With DeltaStream’s role-based access control (RBAC), you can define roles and assign them to users. All of this can be done in the SQL that you know and love. For instance, you can give read privileges on a specific stream to a given role with a one-line statement!

- Break down silos for your streaming data with secure sharing. With namespacing, storage abstraction and role-based access control, DeltaStream breaks down silos for your streaming data and enables you to share streaming data securely across multiple teams in your organization.

- Push notifications. You can create notifications on the results of your continuous queries and push them to a variety of services such as Slack, email or PagerDuty, or have the results call custom APIs. For instance, suppose you have a stream of sensor data from vehicles. You can have a query compute the average speed of each vehicle and, if the average is higher than a threshold for a given time window, send a notification to the driver.
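The logic behind the speeding notification in the last item can be sketched in a few lines. This is a plain Python illustration over a small batch of events, not DeltaStream SQL or its notification API; the event layout `(timestamp, vehicle_id, speed)`, the 60-second tumbling window and the 100 km/h threshold are all assumptions made for the example. A streaming engine would evaluate the same computation continuously as events arrive.

```python
from collections import defaultdict


def speeding_alerts(events, window_seconds=60, threshold_kmh=100.0):
    """Return (vehicle_id, window_start, average_speed) for each window
    whose average speed exceeds the threshold.

    events: iterable of (timestamp_seconds, vehicle_id, speed_kmh) tuples
    (a hypothetical layout chosen for this sketch).
    """
    # (vehicle_id, window_start) -> [sum of speeds, number of readings]
    totals = defaultdict(lambda: [0.0, 0])
    for ts, vehicle_id, speed in events:
        # Assign the event to the tumbling window that contains its timestamp.
        window_start = int(ts // window_seconds) * window_seconds
        bucket = totals[(vehicle_id, window_start)]
        bucket[0] += speed
        bucket[1] += 1

    alerts = []
    for (vehicle_id, window_start), (speed_sum, count) in sorted(totals.items()):
        average = speed_sum / count
        if average > threshold_kmh:
            alerts.append((vehicle_id, window_start, average))
    return alerts


events = [
    (0, "car-1", 90.0),
    (30, "car-1", 130.0),  # car-1 averages 110 km/h in the window [0, 60)
    (10, "car-2", 80.0),
    (70, "car-1", 95.0),   # next window for car-1, below the threshold
]

for vehicle_id, window_start, average in speeding_alerts(events):
    print(f"notify driver of {vehicle_id}: "
          f"avg {average:.1f} km/h in window starting at {window_start}s")
```

In this sample only `car-1` in the first window crosses the threshold, so a single notification would be sent.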

How Users Interact with DeltaStream

Users can interact with DeltaStream through its REST API, a web application or a CLI. The following figure shows a screenshot of the DeltaStream web application. With the REST API, you can also have your own application, or tools like GitHub Actions, submit a set of statements that define an application or pipeline.

When should you use DeltaStream?

With the aforementioned capabilities, you can quickly and easily build streaming applications and pipelines on your streaming data. If you are already using a streaming storage service such as Apache Kafka, AWS Kinesis, Confluent Cloud, AWS MSK or Redpanda, you should consider using DeltaStream. Here are a couple of use cases for DeltaStream.

Assume you have a vehicle information topic in your production Kafka cluster where you ingest real-time information such as GPS coordinates, speed and other vehicle data. Suppose you want to share this stream in real time with another team, but only want to share information from vehicles in a certain geographic region and obfuscate some of the data. Also, you don’t want to give access to the production Kafka cluster and would like to provide the shared information in a topic in a new Kafka cluster. Using DeltaStream, you can easily write a SQL query, like the one shown below, to read the original stream, perform the desired projections, transformations and filtering, and continuously write the result into a new stream backed by a topic in an existing second Kafka cluster called test_kafka.

Once you have the result stream, you can grant the team read privileges on it using the following statement. They will see only the result stream, never the source stream or the production Kafka cluster.

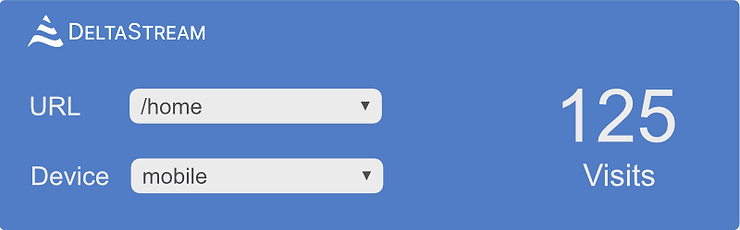
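The filtering, projection and obfuscation in the vehicle example can also be pictured outside SQL. The sketch below is plain Python, not DeltaStream syntax; the field names (`vehicle_id`, `driver`, `lat`, `lon`, `speed`), the bounding box and the choice of a truncated SHA-256 hash as the masking step are all assumptions made for illustration.

```python
import hashlib

# Hypothetical bounding box for the region to share:
# (lat_min, lat_max, lon_min, lon_max).
REGION = (37.0, 38.0, -123.0, -121.0)


def in_region(lat, lon, region=REGION):
    """Filter predicate: is the position inside the shared region?"""
    lat_min, lat_max, lon_min, lon_max = region
    return lat_min <= lat <= lat_max and lon_min <= lon <= lon_max


def mask_id(vehicle_id):
    """Obfuscation step: replace the identifier with a stable one-way token."""
    return hashlib.sha256(vehicle_id.encode("utf-8")).hexdigest()[:12]


def shared_view(records):
    """Yield only in-region records, keeping selected fields and masking the id."""
    for rec in records:
        if in_region(rec["lat"], rec["lon"]):
            # Projection: the driver field is deliberately left out.
            yield {
                "vehicle": mask_id(rec["vehicle_id"]),
                "lat": rec["lat"],
                "lon": rec["lon"],
                "speed": rec["speed"],
            }


source = [
    {"vehicle_id": "VIN123", "driver": "alice", "lat": 37.5, "lon": -122.2, "speed": 64.0},
    {"vehicle_id": "VIN456", "driver": "bob", "lat": 40.7, "lon": -74.0, "speed": 51.0},
]

result = list(shared_view(source))
print(result)
```

Only the first record falls inside the region, and the consumer of `result` never sees the raw identifier or the driver name, which mirrors what the other team would see in the result stream.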

As another example, consider a wiki service where all user interactions with every wiki page are streamed into a Kinesis stream. Let’s assume we want to provide real-time page statistics such as the number of edits per wiki page. You can easily build a materialized view in DeltaStream using an aggregate query like the following:

This will create a materialized view in DeltaStream that holds the edit count per wiki page, and every time an edit event is appended to the wiki_events stream, the view will be updated in real time. You can now show the up-to-date edit count for a wiki page every time it is loaded by querying the materialized view and including the edit count in the wiki page. DeltaStream ensures that every time users open a wiki page, they see the latest edit count for the page.
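To make the idea of an incrementally maintained view concrete, here is a toy Python stand-in. It is not how DeltaStream implements materialized views; the event fields `page` and `type` are hypothetical, and the class only illustrates the pattern of folding each new event into stored state and answering point lookups from that state.

```python
from collections import Counter


class EditCountView:
    """Toy stand-in for a materialized view keyed by wiki page."""

    def __init__(self):
        self._counts = Counter()

    def apply(self, event):
        """Fold one event into the view; only 'edit' events change the counts."""
        if event["type"] == "edit":
            self._counts[event["page"]] += 1

    def lookup(self, page):
        """Point query against the current state (0 for pages with no edits)."""
        return self._counts[page]


view = EditCountView()
for event in [
    {"page": "Home", "type": "edit"},
    {"page": "Home", "type": "view"},   # not an edit, ignored by the view
    {"page": "FAQ", "type": "edit"},
    {"page": "Home", "type": "edit"},
]:
    view.apply(event)

print(view.lookup("Home"))
print(view.lookup("FAQ"))
```

Because each event updates the stored count as it arrives, a lookup at page-load time is a cheap read rather than a scan over the whole event history.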

Now that you have seen some of the capabilities of DeltaStream along with a few example use cases, you should check out DeltaStream as the platform for processing, organizing and securing your streaming data. You can schedule a demo where you can see all these capabilities in the context of a real-world streaming application! Browse our blogs for more in-depth information on features and use cases. Once you are ready, let’s get in touch to build your streaming applications with DeltaStream, the next-generation serverless streaming platform.