16 Jul 2025

7 Ways to Slash Your Snowflake Costs with DeltaStream

Snowflake has become the cornerstone of modern data platforms, with its powerful warehousing capabilities, scalable architecture, and user-friendly interface. But as your data footprint grows, so do the costs.

Teams often find themselves surprised by large Snowflake bills, triggered not just by query volume but by how data is ingested and transformed. It’s easy to fall into the trap of using Snowflake for everything: landing raw data, running batch jobs, refreshing views, and executing transformations. This pattern may work, but it’s far from cost-efficient.

At DeltaStream, we believe in shifting left: processing data earlier in the pipeline, closer to the source. By pushing logic into the stream, you can dramatically reduce the amount of work and cost that lands in Snowflake. Here’s how.

1. Preprocess Before Loading into Snowflake

With DeltaStream, you can transform, enrich, and filter data in real time, before it lands in Snowflake. This means pushing computation to the left of your pipeline and reducing the volume and complexity of data stored and queried in the warehouse.

For example, instead of loading raw Kafka logs into Snowflake and transforming them with Snowflake SQL, you can use DeltaStream to pre-filter by error type, enrich with lookup data, and structure the result, sending only what’s relevant downstream.
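To make the filter, enrich, and structure steps concrete, here is a minimal Python sketch of the per-record logic. It is not DeltaStream code (DeltaStream pipelines are defined in SQL). The field names (`ts`, `service`, `error_type`), the `SERVICE_OWNERS` lookup table, and the set of relevant error types are all hypothetical.

```python
import json
from typing import Iterable, Iterator

# Hypothetical lookup table used for enrichment (service name -> owning team).
SERVICE_OWNERS = {"checkout": "payments-team", "search": "discovery-team"}

# Hypothetical set of error types worth keeping; everything else is dropped.
RELEVANT_ERRORS = {"TIMEOUT", "DB_CONNECTION"}


def preprocess(raw_lines: Iterable[str]) -> Iterator[dict]:
    """Filter, enrich, and reshape raw JSON log lines one record at a time."""
    for line in raw_lines:
        event = json.loads(line)
        # Filter: skip records whose error type is not relevant downstream.
        if event.get("error_type") not in RELEVANT_ERRORS:
            continue
        # Enrich: attach the owning team, defaulting to "unknown" for unmapped services.
        owner = SERVICE_OWNERS.get(event.get("service"), "unknown")
        # Structure: emit only the columns the target table needs.
        yield {
            "ts": event["ts"],
            "service": event["service"],
            "error_type": event["error_type"],
            "owner": owner,
        }


raw = [
    '{"ts": 1, "service": "checkout", "error_type": "TIMEOUT", "msg": "upstream slow"}',
    '{"ts": 2, "service": "search", "error_type": "INFO", "msg": "cache warm"}',
    '{"ts": 3, "service": "billing", "error_type": "DB_CONNECTION", "msg": "refused"}',
]
for row in preprocess(raw):
    print(row)
```

Records that fail the filter never leave the stream, so the warehouse neither stores nor scans them, and the free-text `msg` column is dropped before loading.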

Better yet, DeltaStream can write directly into Snowflake via Snowpipe Streaming, bypassing the warehouse entirely during ingest. This allows teams to avoid costly compute charges typically incurred when using COPY INTO or running ingestion through the warehouse itself.

Why it saves: Reduces storage costs, simplifies downstream queries, and shrinks warehouse compute needed for transformations. Plus, warehouse-less ingest through Snowpipe Streaming cuts ingestion costs by leveraging serverless architecture.

2. Avoid Full-Table Reprocessing

Many batch ELT pipelines reload entire datasets to account for changes, even when only a small subset of rows has been updated. With DeltaStream’s support for Change Data Capture (CDC), you can process only the inserts, updates, and deletes that matter.

Take this scenario: a customer order table with millions of rows might change by only 1–2% daily, yet batch jobs often reprocess 100% of the data. Streaming CDC jobs skip this waste entirely.
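The difference in work can be sketched in a few lines of Python. Assuming a simplified change-event shape (an operation, a primary key, and the new row image; real CDC formats such as Debezium's carry more metadata), applying changes touches only the rows that changed rather than the whole table. `ChangeEvent` and `apply_changes` are hypothetical names used for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ChangeEvent:
    """Simplified CDC record (hypothetical shape): operation, primary key, new row image."""
    op: str                     # "insert", "update", or "delete"
    key: int
    row: Optional[dict] = None  # None for deletes


def apply_changes(table: Dict[int, dict], changes: List[ChangeEvent]) -> int:
    """Apply CDC events to a keyed table in place and return how many events were applied."""
    applied = 0
    for ev in changes:
        if ev.op in ("insert", "update"):
            table[ev.key] = ev.row   # upsert the new row image
        elif ev.op == "delete":
            table.pop(ev.key, None)  # tolerate deletes for keys not present
        else:
            raise ValueError(f"unknown CDC operation: {ev.op}")
        applied += 1
    return applied


# 1,000 existing orders; only 12 change events arrive.
orders = {i: {"status": "open"} for i in range(1000)}
changes = [ChangeEvent("update", i, {"status": "shipped"}) for i in range(10)]
changes += [ChangeEvent("insert", 1000, {"status": "open"}), ChangeEvent("delete", 5)]
print(apply_changes(orders, changes), len(orders))
```

Here the loop does 12 upserts or deletes, whereas a full reload would rewrite all 1,000 rows. The gap widens as the table grows and the daily change rate stays small.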

Why it saves: Minimizes compute-intensive merge and deduplication operations, and reduces write I/O and overall warehouse consumption.

3. Eliminate Batch Refreshes

Dynamic tables in Snowflake are powerful, but they can be costly to refresh, especially when they depend on large base tables. DeltaStream enables continuous view maintenance through streaming pipelines that automatically emit updated results in real time.

For instance, you could replace a daily batch job that refreshes a user engagement summary table with a DeltaStream pipeline that updates the summary on every event or session close.
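Continuous view maintenance comes down to updating an aggregate as each event arrives instead of recomputing it from the full history. The Python sketch below assumes a hypothetical per-user summary (session count and average session length) and a session-close event that carries a duration in seconds. It illustrates the idea and is not how DeltaStream implements it internally.

```python
from collections import defaultdict


class EngagementSummary:
    """Hypothetical per-user summary maintained incrementally, one event at a time."""

    def __init__(self) -> None:
        self.sessions = defaultdict(int)
        self.total_seconds = defaultdict(float)

    def on_session_close(self, user_id: str, duration_s: float) -> dict:
        # Constant work per event: bump the counters for this user only.
        self.sessions[user_id] += 1
        self.total_seconds[user_id] += duration_s
        return self.row(user_id)

    def row(self, user_id: str) -> dict:
        # Read without inserting keys for users we have never seen.
        n = self.sessions.get(user_id, 0)
        total = self.total_seconds.get(user_id, 0.0)
        return {"user_id": user_id, "sessions": n, "avg_session_s": total / n if n else 0.0}


summary = EngagementSummary()
summary.on_session_close("u1", 60)
print(summary.on_session_close("u1", 120))
```

Each call returns the user's updated summary row, which a pipeline could emit downstream as an upsert. Nothing ever rescans earlier sessions.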

Why it saves: You no longer trigger full queries over large datasets, so you avoid costly refresh logic and improve freshness at the same time.

4. Offload Transform Logic from Snowflake

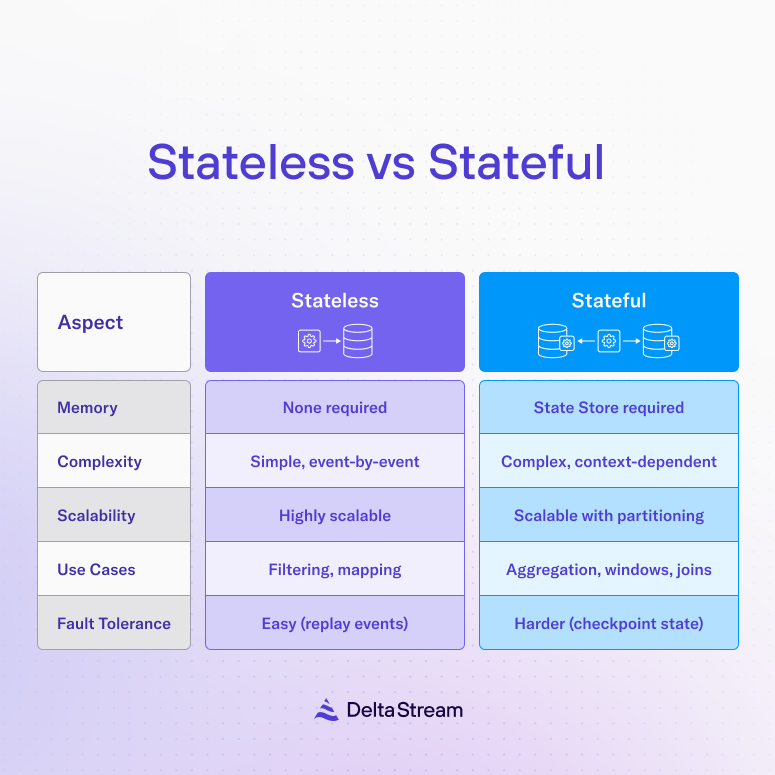

Snowflake is excellent for querying and analysis, but compute-intensive transforms (like aggregations, joins, or business logic) can drive up costs quickly. DeltaStream handles these operations in-stream, using Flink under the hood for highly efficient processing.

Many organizations calculate rolling 30-day metrics in Snowflake by running the same queries over and over. DeltaStream can maintain these metrics in real time and push ready-to-use aggregates directly to Snowflake.
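A rolling 30-day metric can be kept current with a sliding window. Add each new value to a running total, then subtract values as they age out, so each event costs amortized constant work rather than a rescan of 30 days of data. This is a minimal Python sketch that assumes integer day indexes and in-order arrival. A production streaming engine such as Flink must also handle late and out-of-order events, which this sketch ignores.

```python
from collections import deque


class Rolling30DaySum:
    """Sliding-window sum over the last `window_days` days, updated incrementally."""

    def __init__(self, window_days: int = 30) -> None:
        self.window_days = window_days
        self.events = deque()  # (day, amount) pairs in arrival order
        self.total = 0.0

    def add(self, day: int, amount: float) -> float:
        # Assumes events arrive in non-decreasing day order (no late-data handling).
        self.events.append((day, amount))
        self.total += amount
        # Keep only days in the window (day - window_days, day]; evict older entries.
        cutoff = day - self.window_days
        while self.events and self.events[0][0] <= cutoff:
            _, old_amount = self.events.popleft()
            self.total -= old_amount
        return self.total


window = Rolling30DaySum()
for day, amount in [(1, 10), (15, 5), (31, 2), (45, 1)]:
    print(day, window.add(day, amount))
```

On day 31, the day-1 value falls out of the window. On day 45, the day-15 value does, so the returned totals are always ready to publish without querying history.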

Why it saves: Frees up your warehouse from expensive transformation jobs, letting you reserve compute for ad hoc analytics and BI dashboards.

5. Shrink Data Volumes Before Landing

Not all data deserves to be stored in its raw form, especially high-throughput sources like IoT, logs, or user interactions. DeltaStream can intelligently downsample, aggregate, or filter at the edge.

For example, a web analytics pipeline might sample traffic data or aggregate it into hourly rollups before persisting, rather than storing every individual click event.
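Hourly rollups are a simple form of pre-aggregation: many click events collapse into one row per hour and page. The Python sketch below assumes clicks arrive as hypothetical `(epoch_seconds, page)` pairs and truncates each timestamp to the start of its UTC hour.

```python
from collections import Counter
from datetime import datetime, timezone


def hourly_rollup(clicks):
    """Collapse individual (epoch_seconds, page) click events into per-hour, per-page counts."""
    counts = Counter()
    for ts, page in clicks:
        # Truncate the timestamp to the start of its UTC hour.
        hour = datetime.fromtimestamp(ts - ts % 3600, tz=timezone.utc)
        counts[(hour, page)] += 1
    # One output row per (hour, page), sorted for stable output.
    return [
        {"hour": h.isoformat(), "page": p, "clicks": n}
        for (h, p), n in sorted(counts.items())
    ]


clicks = [(0, "/home"), (10, "/home"), (3599, "/pricing"), (3600, "/home")]
for row in hourly_rollup(clicks):
    print(row)
```

Four click events become three rollup rows in this toy input. The reduction grows with the number of clicks per page per hour.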

Why it saves: Reduces long-term storage needs and lowers the cost of queries over large time windows.

6. Right-Size Your Snowflake Warehouse

Many teams overprovision Snowflake warehouses just to handle inefficient ELT jobs. But with DeltaStream doing the heavy lifting upstream, those pipelines shrink—and so can your warehouse.

A pipeline that once required an XL or 2XL Snowflake warehouse to handle full-table refreshes may now run comfortably on a Medium or Large warehouse, thanks to reduced data volumes and simplified logic.
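The savings from a smaller warehouse follow from simple arithmetic. Snowflake standard warehouses double their credit rate with each size step, from X-Small at 1 credit per hour up to 2X-Large at 32. They bill per second with a 60-second minimum each time a warehouse resumes. The sketch below treats that rate table as an assumption to check against current Snowflake pricing for your account, since other warehouse types and generations use different rates. The runtimes in the example are hypothetical, not benchmark results.

```python
# Assumed credit rates for Snowflake standard warehouses (credits per hour).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16, "2XL": 32}


def credits_for_run(size: str, runtime_s: float) -> float:
    """Credits for one resume-to-suspend period: per-second billing, 60-second minimum.

    Ignores idle time before auto-suspend, which is also billed in practice.
    """
    billed_s = max(runtime_s, 60)
    return CREDITS_PER_HOUR[size] * billed_s / 3600


# Hypothetical runtimes: a 30-minute full refresh on 2XL vs. a 15-minute incremental job on M.
print(credits_for_run("2XL", 30 * 60))
print(credits_for_run("M", 15 * 60))
```

Under these assumed runtimes, the two calls print 16.0 and 1.0 credits. Shrinking the warehouse and shortening the job compound each other.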

Why it saves: Directly cuts your Snowflake compute bill by consuming fewer credits per query and letting you lower autoscaling limits.

7. Consolidate Your Architecture

Fragmented pipelines—mixing Airflow, Spark, Kafka Streams, and Snowflake—are costly to operate and maintain. DeltaStream provides a unified streaming-first platform where ingestion, transformation, and delivery happen in one place.

One customer replaced six independent micro-batch jobs with a single DeltaStream pipeline that continuously syncs, transforms, and delivers data to Snowflake in real time. And with built-in support for Snowpipe Streaming, DeltaStream can deliver the final output directly to Snowflake tables without invoking warehouse compute.

Why it saves: Reduces the operational burden, eliminates redundant compute cycles, and ensures consistency without paying for orchestration and retries across tools. Snowpipe Streaming further simplifies ingest, reduces latency, and shrinks your Snowflake footprint by eliminating the need to scale up warehouses just to land data.

Benchmark: Real-World Cost Savings

We put our claims to the test by benchmarking traditional Snowflake ELT pipelines against streaming ETL powered by DeltaStream. The results were clear:

- 🚀 Up to 75% lower Snowflake compute and storage costs

- ⚡ Sub-second freshness across operational and analytical use cases

- 🔟 10x lower warehouse utilization during high-throughput workloads

These savings aren’t hypothetical—they reflect how smart architectural shifts upstream can directly translate to real dollars saved inside your Snowflake account.

Want to dive deeper into how we ran our benchmark? Read the blog!

Want to see what you could save?

Schedule a Streaming Maturity Assessment—a quick, six-question self-evaluation with DeltaStream CEO and ksqlDB creator Hojjat Jafarpour. You’ll receive:

- A maturity score and assessment of your current Snowflake cost posture

- A personalized action plan with quick wins and long-term upgrades

- Architecture recommendations to reduce cost without sacrificing performance

Start optimizing your Snowflake spend today.