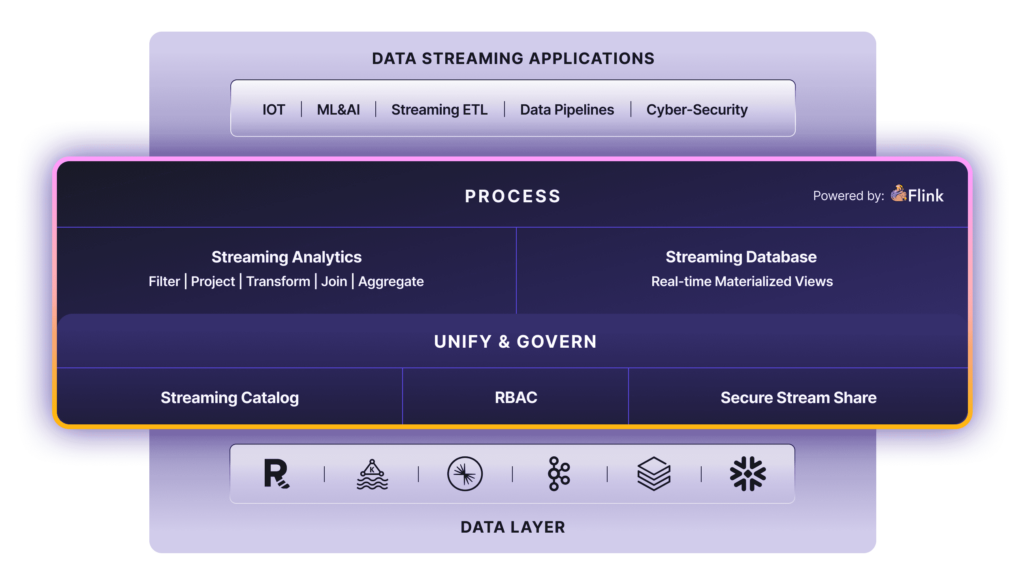

powered by Apache Flink®

The Complete Stream Processing Platform

Unify, Process and Govern all your data streams. Enable your entire organization to harness the power of your streaming data.

Decide how you deploy

Power of the Platform

Use Cases

All the tools and features you need for whatever your data demands.